Practice and feedback are essential to effective learning, yet learning businesses all too often fail to give them the time and attention they deserve. Practice and feedback are powerful tools for moving a learning experience away from pure theory and content and towards deeper learning and application in the real world.

In this fifth episode in our seven-part series on learning science’s role in a learning business, we feature key insights related to practice and feedback from conversations with learning design experts Myra Roldan, Ruth Colvin Clark, Patti Shank, Michael Allen, and Cathy Moore. We connect their perspectives to highlight the importance of practice and feedback as evidence-based tools and critical components of designing effective learning experiences.

To tune in, listen below. To make sure you catch all future episodes, subscribe via RSS, Apple Podcasts, Spotify, Stitcher Radio, iHeartRadio, PodBean, or any podcatcher service you may use (e.g., Overcast). And, if you like the podcast, be sure to give it a tweet.

Listen to the Show

Access the Transcript

Download a PDF transcript of this episode’s audio.

Read the Show Notes

[00:21] – To help us explore practice and feedback and what learning science knows about their ability to support learning, we went back to the Leading Learning Podcast archives and found some choice sound bites from Cathy Moore, Michael Allen, and Patti Shank. We also pull in perspectives from Ruth Colvin Clark and Myra Roldan, who were featured in the previous episode in this series about content design.

Myra Roldan

We start with technologist and learning professional Myra Roldan.

A Tenet of Effective Learning

[02:38] – What one aspect or tenet of effective learning do you wish were more broadly understood and supported by those designing and providing learning to adults?

A lot of instruction that you see out there in the world, whether it’s in higher education, workforce development programs, even these free e-learning courses or paid courses that you can take, they really focus on theory. So helping you to understand a concept. Where they fall flat is on this whole concept of hands-on.

Myra Roldan

Myra has a framework that she and her team developed around this concept of application: taking something from theory and being able to apply it in a real-world setting. Every educational initiative she’s evaluated over the years has fallen flat on application, a piece that is really important.

CRAP

[04:20] – There are many models, frameworks, and approaches to designing learning, including ADDIE, SAM, and design thinking. Which of those do you tend to find useful, and which would you recommend to others to help them ensure they’re designing effective learning?

ADDIE and SAM are process-driven guidelines, not really frameworks for creating learning itself. Myra thinks that design thinking has applications everywhere, but you need to look at whatever solution you’re creating as a product. You need to be able to step out of your comfort zone and work with a group to develop an understanding of who your audience is and what obstacles they face so you can find solutions for delivering creative learning.

The methodology Myra and her team developed for application and a focus on mastery is called CRAP: concrete, repetition (or representation), abstraction, and practice. You do something concrete, then you repeat a process in a different modality, next you learn the theory about it (abstraction), and, finally, you practice and go through it again.

[07:29] – Myra’s comments drive home the importance of designing practice into a course or other learning experience. Practice is one of the four pillars of the methodology that she and her colleagues have developed for designing learning, and it’s something that we’ve certainly talked a lot about over the years. It’s not enough simply to tell learners that they need to practice. You have to scaffold the practice, you have to build in the opportunities, and you have to set aside time for them to do it.

Ruth Colvin Clark

Ruth Colvin Clark is one of the leading translators of academic research on learning science into practical advice for practitioners. We asked for her advice on how learning businesses might give practice and feedback a substantial role in their learning offerings.

Making Practice and Feedback Part of Your Offerings

[08:33] – We know that practice and feedback are critical to learning and to improving, but they are also areas that get ignored. What suggestions do you have for organizations to make practice and feedback a more serious part of their learning offerings?

Ruth agrees that practice and feedback are two critical areas that are often shortchanged. The main reason she’s seen for this is people’s limited time. No matter how complex the knowledge and skills, providers often cram lots of content into a limited time frame, but practice and feedback take time.

I always used to hear, “We have to cover the material.” …That means sometimes just dumping out a lot of information and not really giving people the opportunity to apply that and to see how that, those knowledge and skills, can actually work in their job tasks…. [M]y motto would be “Maybe do less, but do it well.”

Ruth Colvin Clark

Ruth suggests the following:

- Evaluate outcomes to see if your learners are applying knowledge. The vast majority of evaluation in most learning settings is the student rating sheet at the end, which can be misleading. (See our related episode “Rethinking a Dangerous Art Form with Dr. Will Thalheimer.”)

- Set standards regarding engagement. After every new topic or chunk of information, build in a chance for learners to use the knowledge and skills in job-relevant ways.

Learner Engagement

[11:40] – How do you define learner engagement?

Ruth uses the technical term “generative learning.” The key question is, what techniques are you using to help learners mentally process knowledge and skills? These techniques fall into two major categories: overt techniques, which are behavioral and involve some overt action from the learner, and covert techniques, which involve learners’ mental effort.

[14:16] – Are overt or covert approaches to engaging learners more beneficial in terms of helping with learning transfer?

Ruth says providers should offer both overt and covert opportunities, but the advantage of behavioral (overt) engagement is that you can give feedback because the learner has taken some action. Because covert engagement goes on in learners’ heads, it’s harder to provide feedback: you don’t really know what they’ve processed.

[15:19] – Do you have suggestions or ideas for how one can design to help cultivate engagement among learners?

Ruth suggests using self-explanation questions. Research shows that learners who initiate their own self-explanations are more successful. Self-explanation is therefore a technique worth teaching learners, and providers can also insert self-explanation questions to encourage learners to put in the effort. To learn more, check out “Why You Should Add Self-Explanation Questions to Multiple-Choice Questions” by Karl Kapp.

Sponsor: SelfStudy

[18:00] – If you’re looking for a technology partner whose platform development is informed by evidence-based practice, check out our sponsor for this series.

SelfStudy is a learning optimization technology company. Grounded in effective learning science and fueled by artificial intelligence and natural language processing, the SelfStudy platform delivers personalized content to anyone who needs to learn either on the go or at their desk. Each user is at the center of their own unique experience, focusing on what they need to learn next.

For organizations, SelfStudy is a complete enterprise solution offering tools to instantly auto-create highly personalized, adaptive learning programs, the ability to fully integrate with your existing LMS or CMS, and the analytics you need to see your members, users, and content in new ways with deeper insights. SelfStudy is your partner for longitudinal assessment, continuing education, professional development, and certification.

Learn more and request a demo to see SelfStudy auto-create questions based on your content at selfstudy.com.

Patti Shank

[19:14] – Patti Shank is the author of the three books in the Deeper Learning series: Write and Organize for Deeper Learning, Practice and Feedback for Deeper Learning, and Manage Memory for Deeper Learning. Below are some highlights from our conversation with her in a previous episode of the podcast:

Focusing on Content at the Expense of Practice and Feedback

Patti says we spend too much time throwing content at people and, as a result, make it harder for them to learn.

Practice is one of those essential and deep elements that is needed for proficiency…. We worry about developing content for people. We don’t worry as much about developing adequate and accurate practice at the level of proficiency they’re at so that we can move them up…. [W]e deliver far too little practice for any level of proficiency—in fact no proficiency…. And we generally deliver the wrong types of feedback to help people become more proficient. And so we actually create problems for proficiency rather than creating proficiency when we do this wrong.

Patti Shank

Patti disagrees with those who dismiss this kind of discussion as not applicable or “academic stuff.” The research may be academic, and it may be hard to understand, but understanding practice and feedback and other nuances of how learning happens is how we get people to where they need to go—faster and with better results.

Types of Feedback

[21:42] – What types of feedback would be helpful in supporting learners to learn?

Patti frames her discussion about feedback (and how nuanced it is) by limiting it to people who have less prior knowledge in the area being taught and by limiting it to electronic feedback (as opposed to one-on-one feedback). She shares research related to three types of feedback:

- KR (knowledge of results) tells learners whether they got the answer right or wrong.

- KCR (knowledge of correct results) tells learners not only whether they got it right or wrong but also what the correct answer is.

- Elaborative feedback explains why, and there’s a whole host of things that can go into this.

The research shows (though, again, this is very nuanced and so doesn’t apply to every situation) that the best feedback for most people is knowledge of correct results (KCR). Knowledge of results (KR) is not helpful. And for people who are brand-new to a topic, the research shows that they can’t handle a lot of elaborative feedback.

KCR is also more effective when the feedback is shown in the same format in which the questions were originally presented to learners. Patti discusses the implications of this for choosing a multiple-choice question system. For more advanced learners, the most helpful feedback differs from what helps learners with less prior knowledge.

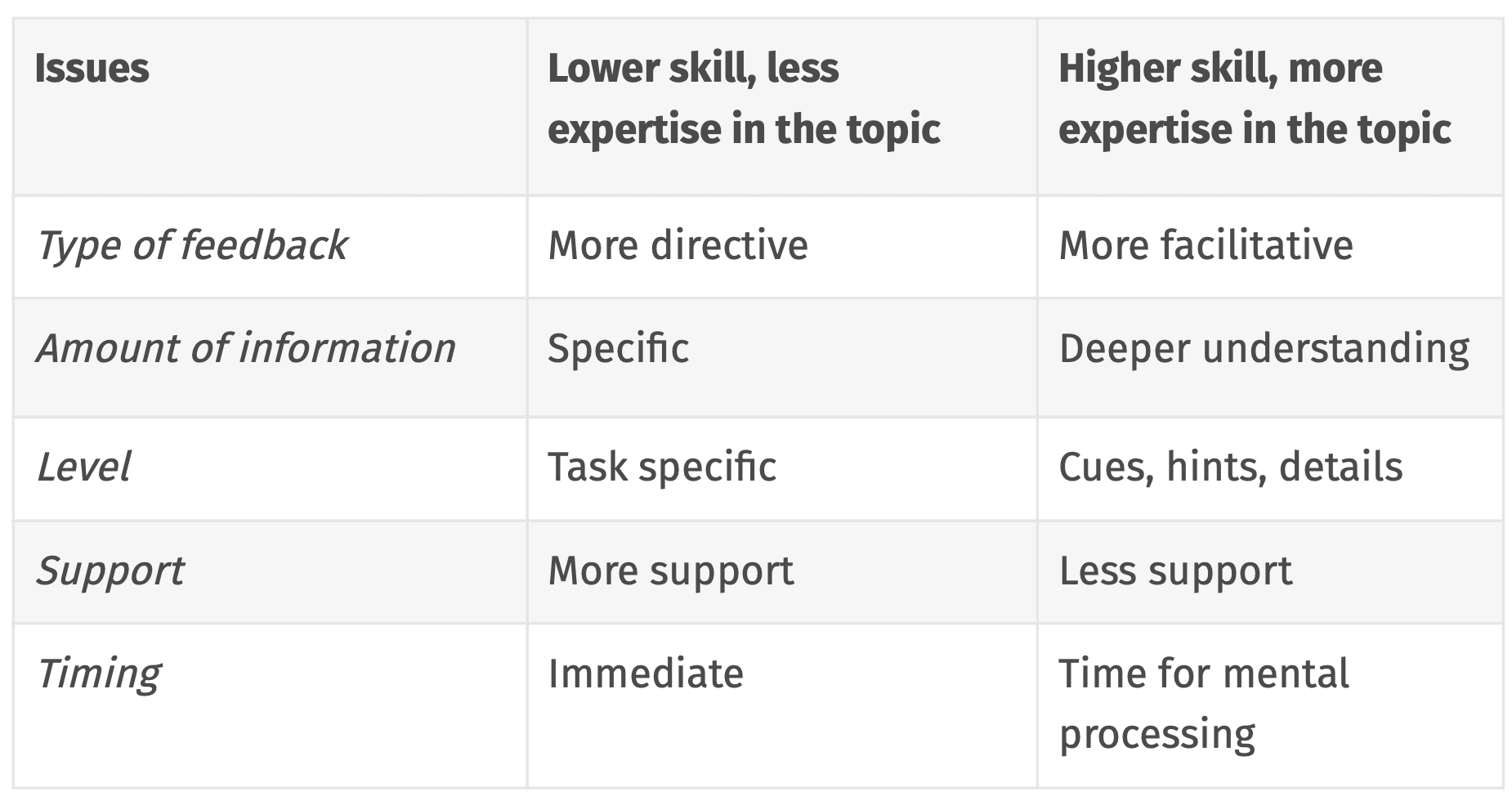

See “Mastering Deeper Learning, Part 2: Feedback” by Dr. Patti Shank for a good summary of how to handle feedback differently at the different ends of the skill and expertise continuum.

Key Takeaways from Patti

[26:15] – We’ve already heard time constraints cited as a big reason why practice and feedback get short shrift. Another big reason is that practice and feedback can be tricky to do well. Patti’s comments show just how nuanced feedback truly is, or how nuanced it should be. A blanket policy of always including elaborative feedback in an e-learning course might actually do learners a disservice if they’re new to the subject.

Patti’s comments also relate to Myra Roldan’s emphasis on knowing your audience, knowing your customers. Understanding what they already know is going to be key to providing appropriate feedback. The distinction between beginning and more advanced learners is very important—it can be hard for a learning business to navigate, especially if it’s trying to serve a wide audience.

To learn more about feedback, check out Leading Learning Podcast episode 209, “The Art and Science of Effective Feedback.”

In discussing feedback, Patti spoke indirectly about multiple-choice questions. She’s a fan of multiple-choice questions when they’re well written, and she’s dedicated time and energy to outlining the best ways to use them effectively for learning.

Michael Allen

Multiple-choice questions are something e-learning industry pioneer Michael Allen brought up when we spoke with him in a previous podcast episode.

Activity and Feedback in the CCAF Design Model

[28:31] – Michael shared that there are almost always four components to an effective learning experience, and those are encapsulated in the CCAF Design Model he developed:

- Context

- Challenge

- Activity

- Feedback

Regarding activity, multiple-choice questions are not what most of us do in real life, so they often don’t accurately measure what learners know. Michael suggests that we instead have people take action or respond with a gesture that shows what they would do in the face of the challenge. If they can’t do it, they should ask for support or clarification. If they don’t realize they can’t do it and they try and fail, developers and teachers know what instruction to give because they’ve seen how learners fail.

As for what kind of feedback makes the most difference, Michael says it’s not about hearing whether you’re right or wrong.

To me, the feedback that makes the most difference is…the feedback that shows me the consequences of what I did. So, if I did things well, I want to see the happy outcomes. If I didn’t do things well, I want to see the consequences of not doing things well because it’s going to help motivate me to learn what I need to and avoid those consequences.

Michael Allen

Cathy Moore

[32:20] – Cathy Moore is another voice we pulled from the archives because her Action Mapping model is very much in keeping with cutting content in favor of increased practice.

Action Mapping

Here’s how Cathy describes the model.

[Action Mapping] is a model that helps us avoid doing information dumps to create more activity-centered training. It starts with the question, “What measurable improvement do we want to see in the organization as a result of this training?” In the case of an association, it would be, “What measurable improvement do our learners want to see in the performance of their organization or in their personal lives?” When you start with that, you can then list what it is that people actually need to do on the job to achieve this change. You avoid jumping immediately to what they need to know and instead list what they need to do—and you ask what makes it hard to do. This leads to things such as practice activities rather than information presentations. In those activities you can link, or provide optionally, the information learners may need. The result is a very activity-focused experience, and the learners have the freedom to pull as much information as they need rather than everybody having to sit through the same information presentation.

Cathy Moore

Why Practice Activities Aren’t the Norm

[34:02] – Why don’t we see more practice activities in learning products?

Cathy explains that the focus on content is a cultural issue.

Education, as most of us experience it, is delivery of information and then testing to see if that information has survived in the memory…. And I think in the world of professional continuing education, that’s really entrenched because we are focusing on certificates or hours that people spend in class rather than what can they do. So it’s a mindset shift—and it’s a scary mindset shift because we’re so familiar with the information-delivery-and-testing model.

Cathy Moore

[35:24] – In this episode, we heard from Myra Roldan, Ruth Colvin Clark, Patti Shank, Michael Allen, and Cathy Moore. All of them are learning designers whose work is well grounded in learning science, and all of them believe practice and feedback to be critical aspects of effective learning.

Follow-Up Assignment

For this episode, we want to offer not a resource but an assignment. A resource is more content. An assignment is a practice opportunity.

- Choose one or more of your learning offerings to audit. Try to find a product that’s representative in some way, maybe not of all that you offer but of a particular product line or a format type.

- In the offering or line of offerings that you choose, ask:

  - What feedback and practice opportunities do you offer your learners?

  - Did you devote the same time and energy to those practice and feedback opportunities as you devoted to content development?

  - Did you use evidence-based approaches to building in practice and providing feedback?

- Based on your informal audit, what changes would you make to how you design and develop offerings in the future?

To make sure you don’t miss the remaining episodes in the series, we encourage you to subscribe via RSS, Apple Podcasts, Spotify, Stitcher Radio, iHeartRadio, PodBean, or any podcatcher service you may use (e.g., Overcast). Subscribing also helps us get some data on the impact of the podcast.

We’d also appreciate it if you’d give us a rating on Apple Podcasts by going to https://www.leadinglearning.com/apple.

We personally appreciate your rating and review, but more importantly reviews and ratings play a big role in helping the podcast show up when people search for content on leading a learning business.

Finally, consider following us and sharing the good word about Leading Learning. You can find us on Twitter, Facebook, and LinkedIn.

[37:43] – Sign-off

Other Episodes in This Series:

- Learning Science for Learning Impact

- Effective Learning with Learning Scientist Megan Sumeracki

- Needs, Wants, and Learning Science

- Designing Content Scientifically with Ruth Colvin Clark and Myra Roldan